I've been using ChatGPT Plus for many months now. Like many others, I use it for simple tasks like spell-checking and more complex ones like brainstorming. It's been great for my personal and work projects. But I wonder if the USD $20 per month fee is worth it for how often I use it. I only interact with ChatGPT a few times a week. If I used the OpenAI API, which charges as you go, I might only pay around $3 per month.

That's one big reason I wanted to set up my own ChatGPT frontend. Not only would I pay only for what I use, but I could also let my family use GPT-4 and keep our data private. My wife could finally experience the power of GPT-4 without us having to share a single account or pay for multiple accounts.

It's been a while since I did any serious web frontend work. I thought about brushing up on my Angular knowledge to build my own ChatGPT frontend, and I was ready for many late nights working on it. But then, in early August, I found microsoft/azurechat on GitHub. Microsoft had created the Azure Chat repository only a few weeks earlier, on July 11. Here's a quote from their README:

Azure Chat Solution Accelerator powered by Azure Open AI Service is a solution accelerator that allows organisations to deploy a private chat tenant in their Azure Subscription, with a familiar user experience and the added capabilities of chatting over your data and files.

I tried it right away. In just four hours, I was able to set up my own private ChatGPT using Docker, Azure, and Cloudflare. The Azure Chat docs mostly cover connecting to Azure OpenAI Service, which is currently in preview with limited access. Even so, I managed to connect it to the OpenAI API, which everyone can use. In this blog post, I'll show you how to do the same.

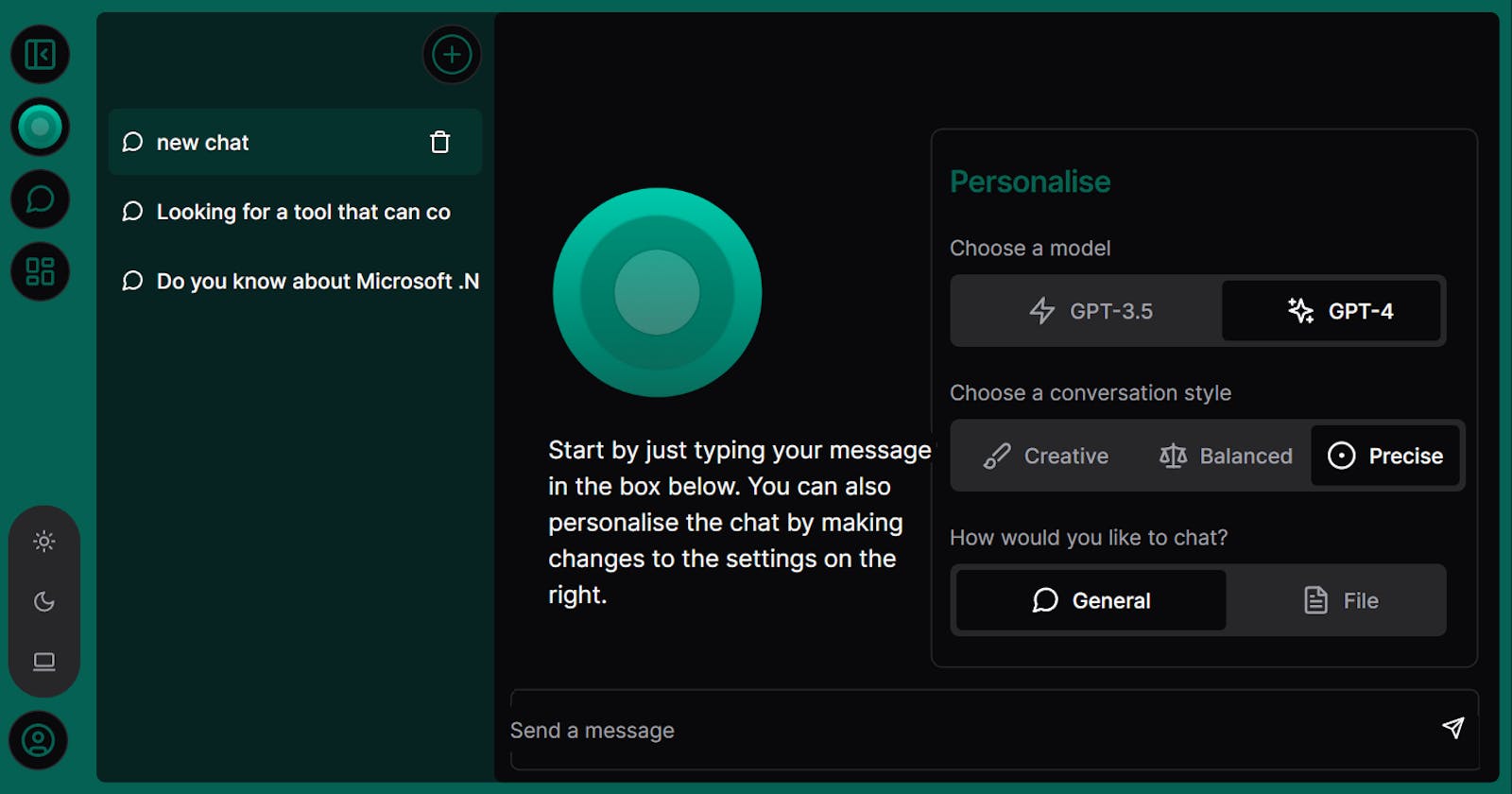

A first look at Microsoft Azure Chat

Microsoft Azure Chat is a Next.js application. By default, NextAuth.js is configured to allow users to sign in with their Microsoft or GitHub account. The chat persistence layer is tightly coupled to Cosmos DB, and LangChain is used to call the GPT models through Azure OpenAI.

If you plan to use Azure OpenAI and your Azure subscription has access to the preview service, you can upload PDF files and chat about their content.

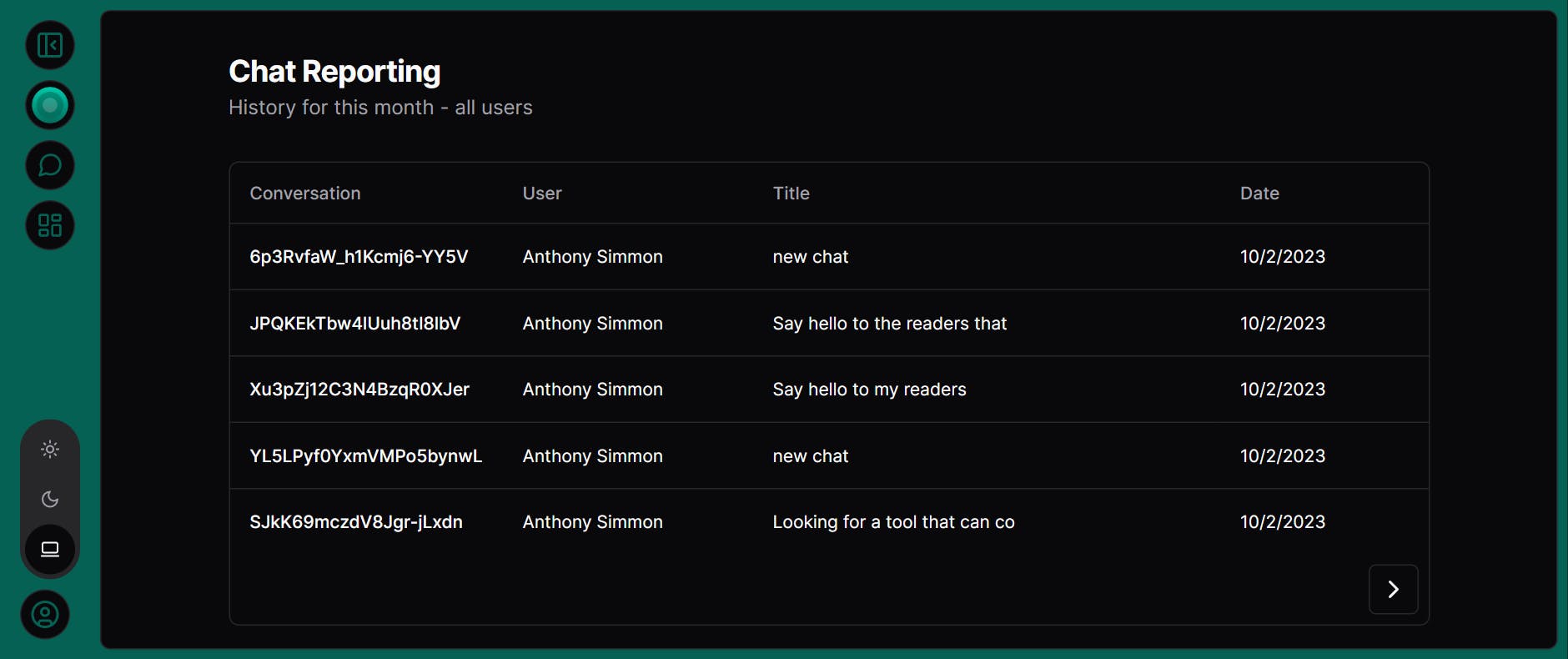

There's also a built-in chat reporting page that can be accessed by users whose email address is in a dedicated environment variable.

Replacing Azure OpenAI with OpenAI API

Because Azure Chat uses LangChain, its built-in OpenAI integration relies on environment variable detection to support both Azure OpenAI and the OpenAI API. This means you can simply replace these suggested environment variables:

- AZURE_OPENAI_API_KEY
- AZURE_OPENAI_API_INSTANCE_NAME
- AZURE_OPENAI_API_DEPLOYMENT_NAME
- AZURE_OPENAI_API_VERSION

... with the single environment variable OPENAI_API_KEY which, as you might have guessed, must contain your API key for the OpenAI API.
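The detection described above can be pictured roughly as follows. This is only a sketch and not LangChain's actual code: `detectOpenAIProvider` and the `OpenAIProvider` type are hypothetical names, and the idea that the Azure key takes precedence when both keys are set is an assumption. Only the environment variable names come from this post.

```typescript
// Hypothetical illustration of environment-based provider detection.
// This is NOT LangChain's real implementation.
type Env = Record<string, string | undefined>;
type OpenAIProvider = "azure-openai" | "openai" | "none";

function detectOpenAIProvider(env: Env): OpenAIProvider {
  // Assumption: an Azure key, when present, wins over the plain OpenAI key.
  if (env["AZURE_OPENAI_API_KEY"]) {
    return "azure-openai";
  }
  // With no Azure key, fall back to the OpenAI API key.
  if (env["OPENAI_API_KEY"]) {
    return "openai";
  }
  // Neither key is set, so no provider can be selected.
  return "none";
}
```

Under that assumption, leaving the Azure variables in place next to `OPENAI_API_KEY` would keep the Azure path active, which is why they should be removed rather than merely supplemented.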

Enabling the GPT-4 model in Azure Chat

By default, Azure Chat doesn't specify which GPT model to use. This means that the default GPT-3.5 model is selected. If you want to leverage GPT-4, you'll need to specify the model name when creating the ChatOpenAI instance in the backend:

    const chat = new ChatOpenAI({
      temperature: transformConversationStyleToTemperature(
        chatThread.conversationStyle
      ),
      modelName: chatThread.chatModel, // <-- This is the new line
      streaming: true,
    });

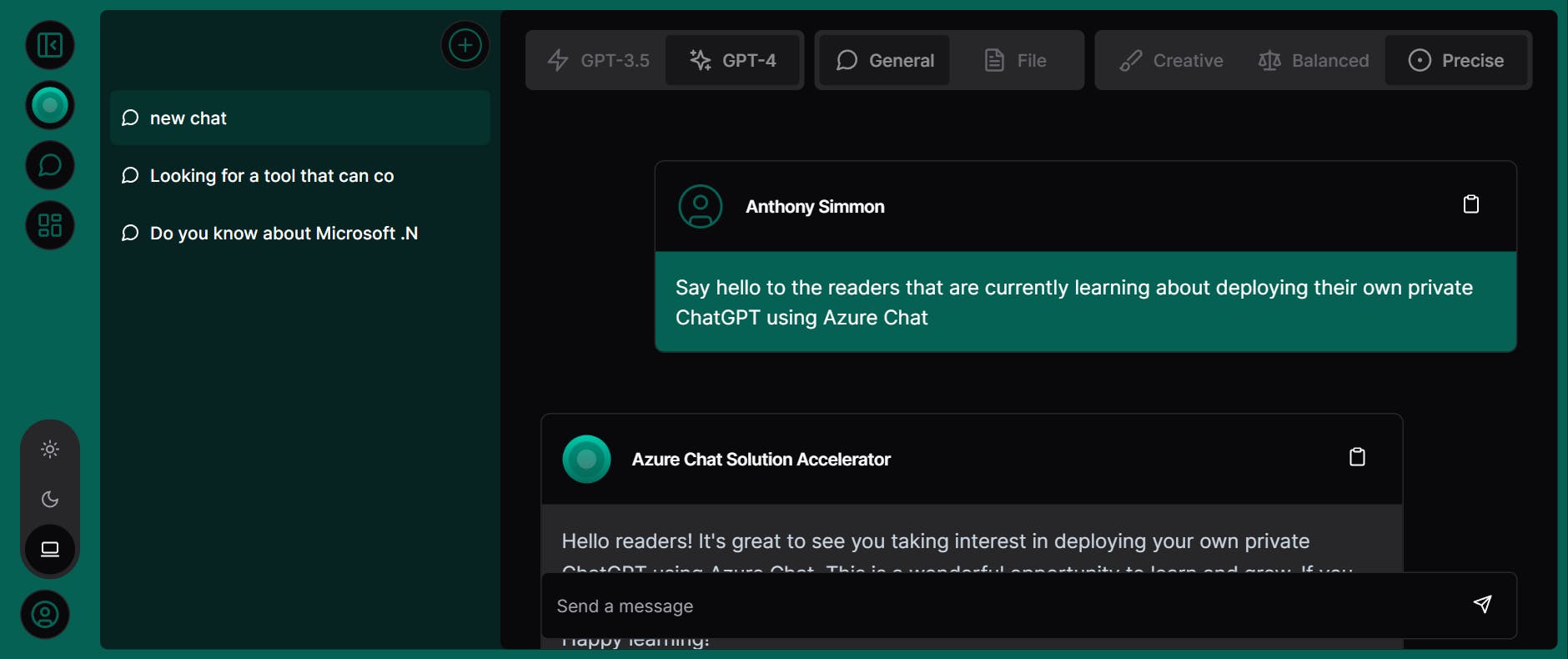

This model name must be provided by the frontend, so a few frontend and backend files need adjustment. To see the edits I made to my version, check out this pull request from my fork. In essence, you'll be creating a chat model selector component for the chat UI and then sending the chosen model name to the backend.

You can see the GPT model selector at the top of this conversation:

With this, users can choose between GPT-3.5 (gpt-3.5-turbo) and GPT-4 (gpt-4). You can also opt for any other GPT model available via the OpenAI API, such as gpt-4-32k, which supports four times as many tokens as the default GPT-4 model.
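Since the model name now comes from the browser, it may be worth validating it on the backend before passing it to ChatOpenAI. The following is a minimal sketch of that idea, not code from my pull request: `SUPPORTED_CHAT_MODELS`, `DEFAULT_CHAT_MODEL`, and `resolveChatModel` are hypothetical names, and the list simply reuses the three model names mentioned above.

```typescript
// Hypothetical allow-list of model names the backend is willing to use.
const SUPPORTED_CHAT_MODELS = ["gpt-3.5-turbo", "gpt-4", "gpt-4-32k"] as const;
type ChatModel = (typeof SUPPORTED_CHAT_MODELS)[number];

// Assumption: fall back to the cheaper model when the request is invalid.
const DEFAULT_CHAT_MODEL: ChatModel = "gpt-3.5-turbo";

// Returns the requested model if it is on the allow-list, else the default.
function resolveChatModel(requested: unknown): ChatModel {
  const match = SUPPORTED_CHAT_MODELS.find((model) => model === requested);
  return match ?? DEFAULT_CHAT_MODEL;
}
```

With a helper like this, the backend could pass `resolveChatModel(chatThread.chatModel)` as the `modelName`, so an unexpected value sent by a client never reaches the OpenAI API.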

Enabling other authentication providers

As I said earlier, Azure Chat supports Microsoft and GitHub authentication out of the box. However, because it's using NextAuth.js, you can easily add other built-in authentication providers. In my case, I wanted my family to log in with their Google accounts. All I needed to do was to modify the auth-api.ts file and add the Google provider:

    const configureIdentityProvider = () => {
      const providers: Array<Provider> = [];

      if (process.env.GOOGLE_CLIENT_ID && process.env.GOOGLE_CLIENT_SECRET) {
        providers.push(
          GoogleProvider({
            clientId: process.env.GOOGLE_CLIENT_ID!,
            clientSecret: process.env.GOOGLE_CLIENT_SECRET!,
            async profile(profile) {
              const newProfile = {
                ...profile,
                id: profile.sub,
                isAdmin: adminEmails.includes(profile.email.toLowerCase()),
              };
              return newProfile;
            },
          })
        );
      }

      // ... rest of the code that configures Microsoft and GitHub providers

In the default configuration, all users from the configured providers can access the app. That's why I added a mechanism to my fork that restricts access to a list of email addresses specified in an environment variable. It's a simple implementation of NextAuth.js's signIn callback.
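To give an idea of what such a restriction can look like, here is a minimal sketch. It is not the exact code from my fork: `parseAllowedEmails`, `isEmailAllowed`, and `createSignInCallback` are hypothetical names, the comma-separated format is an assumption, and `SignInParams` is a reduced local type standing in for the richer object NextAuth.js passes to the signIn callback.

```typescript
// Reduced local type so the sketch stands alone; NextAuth.js passes more fields.
type SignInParams = { user: { email?: string | null } };

// Hypothetical helper: turn "a@x.com, b@y.com" into a normalized set.
function parseAllowedEmails(raw: string | undefined): Set<string> {
  return new Set(
    (raw ?? "")
      .split(",")
      .map((entry) => entry.trim().toLowerCase())
      .filter((entry) => entry.length > 0)
  );
}

// Fails closed: a missing email or an empty allow-list denies access.
function isEmailAllowed(
  email: string | null | undefined,
  allowed: Set<string>
): boolean {
  return typeof email === "string" && allowed.has(email.trim().toLowerCase());
}

// Builds a function with the shape expected for NextAuth.js callbacks.signIn.
function createSignInCallback(rawAllowList: string | undefined) {
  const allowed = parseAllowedEmails(rawAllowList);
  return async ({ user }: SignInParams): Promise<boolean> =>
    isEmailAllowed(user.email, allowed);
}
```

In the NextAuth.js options, this could be wired as `callbacks: { signIn: createSignInCallback(process.env.ALLOWED_EMAILS) }`, where `ALLOWED_EMAILS` is a made-up variable name. Returning `false` from the callback rejects the sign-in attempt.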

Deploying Azure Chat as a containerized application

Containerized applications can be deployed almost anywhere. I personally used Azure App Service with the free Cosmos DB tier in this scenario, but you could host it on premises, on a virtual machine, or on any cloud provider that supports containers.

Fortunately, the Dockerfile provided in the repository works right out of the box. You can use it as-is. I publish the image of my Azure Chat fork to Docker Hub using GitHub Actions with this workflow. Don't forget to pass the environment variables to the container.
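A forgotten variable tends to surface only when a user hits the affected feature, so a small startup check can help. The sketch below is an illustration rather than something that exists in the repository: `findMissingEnvVars` and `REQUIRED_ENV_VARS` are hypothetical names, and the list is an assumption for an OpenAI API plus NextAuth.js setup that you would adapt to your own configuration.

```typescript
type Env = Record<string, string | undefined>;

// Assumed list: OPENAI_API_KEY comes from this post, the NEXTAUTH_* names are
// standard NextAuth.js variables. Add your Cosmos DB and provider variables.
const REQUIRED_ENV_VARS = ["OPENAI_API_KEY", "NEXTAUTH_SECRET", "NEXTAUTH_URL"] as const;

// Returns the names that are unset or blank, in the order they were listed.
function findMissingEnvVars(env: Env, required: readonly string[]): string[] {
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Possible usage at application startup (left as a comment on purpose):
//   const missing = findMissingEnvVars(process.env, REQUIRED_ENV_VARS);
//   if (missing.length > 0) {
//     throw new Error(`Missing environment variables: ${missing.join(", ")}`);
//   }
```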

Next, I integrated Cloudflare as a reverse proxy for an extra layer of security, with a custom DNS entry.

Conclusion

It's your turn to fork microsoft/azurechat. While the documentation mentions enterprise use, I don't think the project is quite ready for that scale, especially since Azure OpenAI isn't generally available yet. However, at this stage, it's excellent for personal use and it's a cheaper replacement for ChatGPT Plus.

Do you find the "pay-as-you-go" model more appealing than a ChatGPT Plus subscription? Have any of you considered deploying Azure Chat for your customers? Do you know other notable "ChatGPT-like" frontends? For those who value privacy, would you prefer hosting your own ChatGPT? I invite you to share your thoughts in the comments!